During this year’s WASP Joint PhD Summer School, WARA Media and Language partner Parsd collaborated with WASP Humanity and Society (HS) Lead Professor Ericka Johnson to explore the concept of trust in AI software development.

Alexandra Kafka Larsson speaking at the Joint PhD Summer School session in Norrköping, August 2024.

Parsd is a startup offering a digital research platform that uses AI-powered text and audio analysis to support collaborative research workflows and produce high-quality research outputs efficiently. Our goal is to help analysts generate more valuable insights while improving the efficiency and accuracy of their research processes.

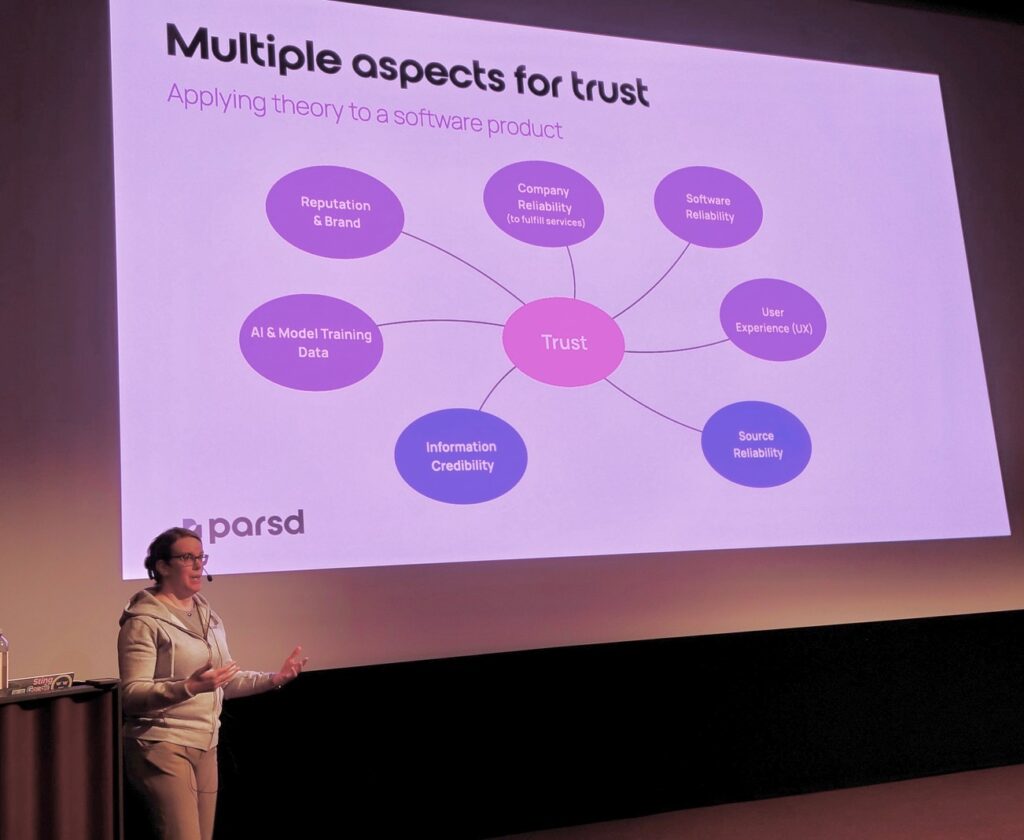

Given our deep involvement with various AI technologies and models, we wanted to share our experiences of building trust in AI applications. But what are the core elements of trust in this context?

Alexandra Kafka Larsson, Founder and CEO of Parsd, suggests starting with the foundation model’s training process, including the data used to train it. The reading material for WASP HS PhD students included articles on Data Feminism, such as Klein and D’Ignazio (2024), which apply a feminist analysis to the power dynamics of model creation. The principles of Data Feminism, originally developed to address inequalities in data science, emphasize understanding power, especially how structural privilege and oppression become embedded in data systems. By integrating these principles into AI research, we can challenge the unequal and exclusionary practices often present in AI development. The framework not only advocates for marginalized voices but also strives for ethical and equitable AI technologies.

However, from a startup perspective, influencing how foundation models are created is often beyond our scope. Thus, we must focus on aspects of trust directly affecting end-users:

By addressing these aspects, we aim to create a strong foundation of trust that enhances user engagement and drives long-term loyalty to our AI solutions. Parsd is a non-academic industry partner to the WASP WARA Media & Language Research Arena.

Klein, L. & D’Ignazio, C. (2024). Data Feminism for AI. In: The 2024 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’24), Rio de Janeiro, Brazil: ACM, pp. 100–112.