Why Analysts Need More Than Just “Good Enough” When Professional Credibility Is at Stake

When we meet with customers, we often spend considerable time discussing trust. Not just one aspect of trust, but the multiple layers that together determine whether an analyst is willing to stake their professional reputation on AI-assisted insights.

Recent high-profile cases, including a Swedish deputy prime minister caught using a hallucinated quote in a major speech, highlight why this conversation matters more than ever.

As someone who spent years providing intelligence briefings to military commanders, I know what it means to put your name on an analysis when decisions truly matter. Against this backdrop, I want to explore what it takes to build trustworthy AI-powered analysis that analysts can confidently stand behind.

The Foundation: Legal and Compliance Trust

The conversation often starts with fundamental questions: Which large language models do we use? Will customer data be used to train them?

“One of the main benefits of using a paid service instead of a free one is that you’re not required to let the provider train on your own data.”

This isn’t just about privacy; it’s about professional integrity. At Parsd, we ensure this is covered in legal agreements with our suppliers, and we handle privacy and copyright aspects as a sub-processor while customers remain the data owners.

But compliance is just the entry point to trust. The real challenges lie deeper.

Beyond Compliance: Addressing AI Hallucination

Anyone with AI experience has heard of models inventing facts, known as hallucinating. Initially, many people used AI like Google, typing short keyword queries about commonly known facts. This worked for general knowledge but failed catastrophically with domain-specific concepts, where models often invented both the facts and the sources.

We’ve addressed this through several technical approaches:

- Retrieval-Augmented Generation (RAG) lets the system search your own data and use AI to analyze that specific content, rather than relying solely on training data.

- Tool integration allows AI models to query search engines or databases, so responses can be up to date instead of limited to what was in the training data.

- Knowledge Graphs store facts as linked objects—like “Stockholm is the capital of Sweden”—allowing AI models to verify important facts against structured databases rather than just searching text snippets.
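To make the knowledge-graph idea concrete, here is a minimal Python sketch, not Parsd’s implementation. It assumes claims have already been extracted from a model’s answer as subject-predicate-object triples; the names `Triple`, `KNOWLEDGE_GRAPH` and `check_claim` are hypothetical, and the "contradicted" rule assumes each predicate allows only one object per subject.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One fact stored as subject-predicate-object, e.g. (Stockholm, capital_of, Sweden)."""
    subject: str
    predicate: str
    obj: str


# Hypothetical in-memory knowledge graph: a set of linked facts.
KNOWLEDGE_GRAPH: set[Triple] = {
    Triple("Stockholm", "capital_of", "Sweden"),
    Triple("Oslo", "capital_of", "Norway"),
}


def check_claim(claim: Triple, graph: set[Triple]) -> str:
    """Classify a claim extracted from model output against the graph.

    "supported": the exact fact is stored.
    "contradicted": the graph holds a different object for the same subject
    and predicate (assumes single-valued predicates).
    "unverified": the graph says nothing about the claim.
    """
    if claim in graph:
        return "supported"
    for fact in graph:
        if fact.subject == claim.subject and fact.predicate == claim.predicate:
            return "contradicted"
    return "unverified"


print(check_claim(Triple("Stockholm", "capital_of", "Sweden"), KNOWLEDGE_GRAPH))  # supported
print(check_claim(Triple("Stockholm", "capital_of", "Norway"), KNOWLEDGE_GRAPH))  # contradicted
```

The point of the structured lookup is that an "unverified" result is itself useful: it flags a claim the analyst still has to check, instead of letting a fluent answer pass unexamined.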

“By having users bring their own data, we can provide ‘grounding’ for the answers AI provides.”
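A minimal sketch of that grounding step, assuming the user’s documents are plain strings keyed by an ID. Word overlap stands in for the vector search a production RAG system would typically use, and `retrieve` and `build_grounded_prompt` are hypothetical names; the resulting prompt would then be sent to whichever language model is in use.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens; a crude stand-in for an embedding model."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), doc_id) for doc_id, text in documents.items()]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by ID
    return [doc_id for _, doc_id in scored[:k]]


def build_grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the user's own passages."""
    ids = retrieve(query, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the passages below and cite their IDs. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


docs = {
    "report-17": "Drone incursions near the airbase rose in March.",
    "memo-03": "The supply convoy was delayed by weather.",
    "brief-09": "Airbase security was reinforced after the drone incursions.",
}
print(build_grounded_prompt("drone incursions airbase", docs))
```

Asking the model to cite passage IDs and to admit when the passages lack an answer is one simple way to keep each answer traceable to the user’s own documents.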

The Source Trust Challenge

This leads to the crucial question: which data sources are reliable enough to stake professional credibility on?

We’ve combined scientific practices from the social sciences with intelligence tradecraft. Academic training teaches us to provide references that distinguish our own ideas from other people’s work. But references focus on the type of content, such as articles or books from respected publishers.

Intelligence work taught me to separate the publishing organization from the content itself. We rate organizations or individuals based on their interests, track record, and reliability. This means we can rate a source as either trusted or untrusted, which is crucial in a world plagued by disinformation.

“Source criticism is important but cumbersome. That’s why maintaining trust in known sources is equally important—we can focus criticism on new sources requiring assessment.”

The Swedish Internet Foundation emphasizes this approach: build a trust foundation of known sources, then focus source criticism on new, unassessed sources.

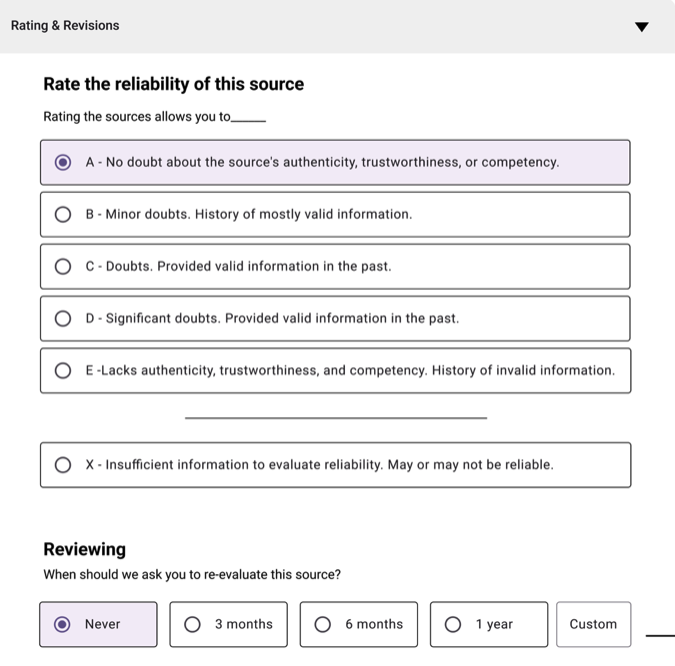

An example of Source Reliability rating in the Parsd Smart Digital Library
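The "trust foundation plus focused criticism" approach can be sketched as a small source registry. This is a hypothetical Python illustration, not how the Parsd Smart Digital Library is built: it borrows the A to F source-reliability letters of the Admiralty (NATO) system as one familiar tradecraft scale, and the cut-offs for "trusted" and "untrusted" are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Reliability(Enum):
    """Source reliability letters from the Admiralty system (assumed scale)."""
    A = "Completely reliable"
    B = "Usually reliable"
    C = "Fairly reliable"
    D = "Not usually reliable"
    E = "Unreliable"
    F = "Reliability cannot be judged"


@dataclass
class Source:
    name: str  # the publishing organization or individual, not the content
    rating: Optional[Reliability] = None  # None means not yet assessed
    notes: str = ""  # interests, track record, and so on


# Hypothetical cut-offs: A and B count as trusted, D and E as untrusted.
TRUSTED = {Reliability.A, Reliability.B}
UNTRUSTED = {Reliability.D, Reliability.E}


def triage(sources: list[Source]) -> dict[str, list[str]]:
    """Split sources into trusted, untrusted, and a queue that needs source criticism."""
    buckets: dict[str, list[str]] = {"trusted": [], "untrusted": [], "needs_review": []}
    for source in sources:
        if source.rating in TRUSTED:
            buckets["trusted"].append(source.name)
        elif source.rating in UNTRUSTED:
            buckets["untrusted"].append(source.name)
        else:  # unassessed, C, or F: this is where criticism effort goes
            buckets["needs_review"].append(source.name)
    return buckets


registry = [
    Source("Known research institute", Reliability.A),
    Source("Anonymous blog", Reliability.E),
    Source("New newsletter"),
]
print(triage(registry))
```

Because known sources keep their ratings, only the "needs_review" queue demands fresh effort, which mirrors the idea of focusing source criticism on new, unassessed sources.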

Digital Provenance: Linking Sources to Content

The next step is linking sources to content files such as PDFs, documents, web pages, and audio files. Once they are connected, we can always display the source name and its user-provided trust rating alongside the content.

This establishes a digital provenance chain for each piece of content. Initiatives such as Content Credentials from the Coalition for Content Provenance and Authenticity (C2PA) encode provenance into the files themselves, but analysts also need easy-to-use mechanisms for managing trust-enhancing data.
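A minimal sketch of linking content to its source, assuming each file is available as raw bytes when it is added to the library. A SHA-256 fingerprint stands in for provenance metadata here; this is not the C2PA Content Credentials format, and `SourceRecord`, `ContentItem`, `ingest` and `provenance_label` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    name: str
    trust_rating: str  # user-provided rating, e.g. "B" or "untrusted"


@dataclass(frozen=True)
class ContentItem:
    title: str
    kind: str  # "pdf", "web page", "audio", ...
    sha256: str  # fingerprint of the exact bytes that were ingested
    source: SourceRecord  # link back to the publishing source


def ingest(title: str, kind: str, data: bytes, source: SourceRecord) -> ContentItem:
    """Register a file with its source and fingerprint it so later changes are detectable."""
    return ContentItem(title, kind, hashlib.sha256(data).hexdigest(), source)


def provenance_label(item: ContentItem) -> str:
    """Text shown next to the content: where it came from and how the source is rated."""
    return f"{item.title} ({item.kind}) | source: {item.source.name} | rating: {item.source.trust_rating}"


def is_unchanged(item: ContentItem, data: bytes) -> bool:
    """Check that the bytes still match the fingerprint recorded at ingestion."""
    return hashlib.sha256(data).hexdigest() == item.sha256


src = SourceRecord("Example Institute", "B")
item = ingest("Annual report", "pdf", b"%PDF-1.7 example bytes", src)
print(provenance_label(item))
```

Storing the link at ingestion time means the rating travels with every later view of the content, and the fingerprint lets a reviewer confirm they are looking at the same file the analyst used.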

“In a world where it’s harder to know what’s true or authentic, we’re focusing on maintaining curated datasets as our collective trust foundation.”

Authenticated Provenance: The User Identity Layer

The provenance chain must also be transparent about the analyst’s identity. We’ve seen how anonymous social media accounts increase polarization when posters aren’t held accountable.

To provide fact-based insights, we need each user to have a single, authenticated account tied to their actual identity. This enables a process similar to scientific peer review, in which others can scrutinize the insights.

“To allow for proper scrutiny, we need not just digital provenance but authenticated provenance.”
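Authenticated provenance can be sketched as a publishing rule: an insight becomes a reviewable record only if its author has a verified identity and it cites the content it rests on. This is hypothetical Python that assumes the login flow has already checked an electronic ID and set an `eid_verified` flag; it does not talk to any real eID service.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Analyst:
    user_id: str
    display_name: str
    eid_verified: bool  # assumed to be set by the login flow after an electronic ID check


@dataclass
class Insight:
    text: str
    author: Analyst
    cited_content: list[str] = field(default_factory=list)  # fingerprints of content relied on


class ProvenanceError(ValueError):
    """Raised when an insight lacks an authenticated author or cited content."""


def publish(insight: Insight) -> dict:
    """Return a record others can scrutinize, or refuse if provenance is incomplete."""
    if not insight.author.eid_verified:
        raise ProvenanceError("author has no verified electronic ID")
    if not insight.cited_content:
        raise ProvenanceError("insight cites no content")
    return {
        "text": insight.text,
        "author": insight.author.display_name,
        "author_id": insight.author.user_id,
        "cites": list(insight.cited_content),
    }


analyst = Analyst("u-1", "A. Analyst", eid_verified=True)
print(publish(Insight("Incursions rose in March.", analyst, ["3f2a9c"])))
```

Refusing to publish, rather than merely warning, is a design choice: it makes the accountable author and the cited evidence part of every record a reviewer sees.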

For this, we require registration with an electronic ID. In Sweden, where 8.6 million people use BankID (99.9% of those aged 18-67), this is actually the most frictionless way to log in. EU standardization through the European Digital Identity Wallet initiative suggests this approach will scale across Europe.

Beneficial Friction: Design Decisions That Matter

Designing software involves balancing ease of access against beneficial friction. Requiring an electronic ID keeps certain actors from collaborating on the platform, which is a feature, not a bug.

Source Management tools let analysts take regular inventory of their sources and reflect on their data collection patterns. Some analysts diversify their sources once they see them visualized.
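The text does not say how the source mix is measured, so the sketch below is an assumption: it counts collected items per source category (the categories are illustrative) and reports normalized Shannon entropy next to a simple text chart. `source_mix`, `diversity` and `text_chart` are hypothetical names.

```python
import math
from collections import Counter


def source_mix(categories: list[str]) -> Counter:
    """Count how many collected items come from each source category."""
    return Counter(categories)


def diversity(counts: Counter) -> float:
    """Normalized Shannon entropy: 0.0 = a single category, 1.0 = a perfectly even mix.

    Assumes all counts are positive; normalizes over the categories actually present.
    """
    if len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((n / total) * math.log(n / total) for n in counts.values())
    return entropy / math.log(len(counts))


def text_chart(counts: Counter) -> str:
    """A crude bar chart an analyst could scan during a source inventory."""
    if not counts:
        return ""
    width = max(len(name) for name in counts)
    return "\n".join(f"{name:<{width}} {'#' * n}" for name, n in counts.most_common())


mix = source_mix(["news", "news", "news", "think tank", "academic"])
print(text_chart(mix))
print(round(diversity(mix), 2))
```

Normalizing over the categories present keeps the score between 0 and 1, but it cannot reveal categories an analyst never collects from; comparing against a fixed list of expected categories would be one way to surface those gaps.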

“We introduce some friction that will be beneficial for all users in the long run.”

Visualizing source diversity often prompts analysts to broaden their perspectives, a natural quality improvement mechanism.

The Curated Future

We seem to have no choice but to bet on a curated future, building valuable sets of fact-based insights for when things truly matter. With methods rooted in scientific methodology, analysts can be proud to put their names on insights that are carefully crafted yet productively enhanced by responsible AI use.

“Let’s show the world that AI software can be used as a force for good, not just providing quick answers without reflection.”

The recent example of the Swedish deputy prime minister’s hallucinated quote serves as a stark reminder: we must make it easy to follow sound methods that provide a counter-force to disinformation.

What This Means for Organizations

As more analysts adopt authenticated provenance and responsible AI use, organizations need to understand that trustworthy analysis requires investment in proper infrastructure, training, and methodology.

The question isn’t whether to use AI in analysis. It’s whether to use it responsibly, with proper safeguards, or to risk the kind of embarrassment that comes from a hallucinated quote in a public speech.

Analysis is an often invisible profession, and analysts deserve tools that match the stakes of their work. When your name is on the line, good enough isn’t good enough.

What affects how you trust AI-powered software? How do you balance productivity gains with professional accountability in your analytical work?

Alexandra Kafka Larsson is the CEO and Co-founder of Parsd, a digital research platform that helps analysts create trusted insights. She previously served as a military intelligence officer in the Swedish Air Force and has over 30 years of experience in intelligence systems and methods.